Building a data center is a monumental undertaking, and its success is almost entirely decided by how well you plan upfront. This isn't just about pouring concrete; it's about translating your business goals into a viable blueprint. We’re talking about a deep-dive needs assessment, razor-sharp site selection, and a rock-solid financial model that ensures your project has a firm foundation.

Laying the Groundwork for Your Data Center Build

Before a single shovel hits the dirt, the fate of your data center is largely sealed. This foundational stage is less about hardware and more about strategic foresight. It’s where you turn abstract business objectives into tangible requirements for capacity, power density, and the level of reliability you absolutely need to maintain.

Think of it like drawing up the plans for a skyscraper. If the blueprint is flawed, the entire structure is compromised. The decisions you make now will ripple through the entire lifecycle of the facility, impacting everything from long-term operational costs to its ability to scale with future technology.

Conducting a Thorough Needs Assessment

First things first: you have to define precisely what this facility needs to do. This goes way beyond just counting racks. A proper needs assessment means forecasting future growth, especially with power-hungry AI workloads becoming the norm.

To get this right, you have to answer some tough questions that will guide the entire project:

- Capacity Planning: What are your Day 1 power and space needs, and what does your five-year growth trajectory look like? I've seen too many projects fail because they were under-provisioned, leading to incredibly expensive retrofits down the road.

- Density Requirements: Are you supporting low-density storage or high-density compute nodes for AI and machine learning? This choice has a direct and significant impact on your cooling and power distribution design.

- Tier Level Classification: How much downtime can your business actually tolerate? Your decision between a Tier II, III, or IV facility will define your redundancy levels and, by extension, your budget. A Tier III design is concurrently maintainable, for instance, while a Tier IV is fully fault-tolerant.
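To make the capacity question concrete, here is a minimal sketch of a five-year load projection. The function name, the 2 MW Day 1 load, and the 25% growth rate are illustrative assumptions, not figures from a real project; the sketch simply compounds IT load year over year.

```python
def project_it_load_kw(day1_kw: float, annual_growth: float, years: int = 5) -> list[float]:
    """Return projected IT load in kW for year 0 through `years`, using compound growth."""
    if day1_kw < 0 or annual_growth <= -1:
        raise ValueError("day1_kw must be >= 0 and annual_growth must be > -1")
    return [day1_kw * (1 + annual_growth) ** year for year in range(years + 1)]


# Hypothetical inputs: 2 MW on Day 1, growing 25% per year.
projection = project_it_load_kw(day1_kw=2000.0, annual_growth=0.25)
for year, load in enumerate(projection):
    print(f"Year {year}: {load:,.0f} kW")
```

Running a few growth scenarios side by side is a quick way to see how sensitive your power and space reservations are to the growth assumption.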

During this early planning, it’s also smart to think about long-term sustainability. It's the right time to investigate strategies for reducing the environmental impact of data centers.

Strategic Site Selection and Due Diligence

Finding the right piece of land is arguably the single most critical decision you'll make. A great site combines favorable geography, robust infrastructure, and cooperative local government. Rushing this step is a recipe for crippling delays and operational nightmares.

Your due diligence checklist has to be exhaustive. We're talking about confirming power availability from multiple substations, ensuring proximity to diverse, low-latency fiber routes, and verifying geological stability. Avoiding floodplains and seismic zones is completely non-negotiable. Don’t overlook local permitting hurdles, either—a municipality with a streamlined approval process can easily shave months off your timeline. This is where partnering with experts in heavy civil construction pays dividends, giving you invaluable insight into site readiness.

A location that looks perfect on paper can be a complete dead-end if you can't secure power commitments from the local utility or if zoning laws are restrictive. Always engage with local authorities and utility providers before making any financial commitments.

Financial Modeling and Capital Investment

Let's be clear: building a data center requires a massive capital investment. The global data center construction market is valued at USD 239.0 billion in 2025 and is projected to skyrocket to USD 428.0 billion by 2035. Understanding this financial landscape is crucial for developing a realistic budget. It needs to cover not just construction and equipment but also long-term operational costs like power, staffing, and maintenance.

Your financial model should be granular, breaking down every anticipated cost:

- Land acquisition and site preparation

- Building construction and materials

- Mechanical and electrical plant (MEP) equipment

- Network infrastructure and security systems

- Commissioning and testing services

To help organize your thinking around this critical step, here's a checklist of the key factors you need to evaluate.

Data Center Site Selection Checklist

This table summarizes the critical factors we look at when evaluating a potential data center location. Missing just one of these can introduce significant risk to the project.

| Evaluation Category | Key Considerations | High-Priority Factors |
|---|---|---|
| Power Infrastructure | Availability from multiple substations, cost per kWh, utility provider reliability, future capacity expansion | Dual-feed power from separate grids; favorable long-term power purchase agreements (PPAs). |
| Network Connectivity | Proximity to multiple fiber carriers, latency to key markets, route diversity (avoiding single points of failure) | Access to at least three diverse fiber providers; low-latency routes to major internet exchanges. |
| Geographic & Environmental | Risk of natural disasters (floods, earthquakes, tornadoes), climate impact on cooling efficiency | Location outside of a 100-year floodplain; low seismic activity; moderate climate to reduce cooling costs. |
| Permitting & Zoning | Local zoning laws, political climate, entitlement process timeline, potential for community opposition | "By-right" zoning for data center use; a clear and predictable permitting process with local government support. |
| Site Accessibility | Proximity to major transportation routes (airports, highways), availability of skilled labor for construction and operations | Easy access for heavy equipment during construction; located within a reasonable commute for technical staff. |

Thoroughly vetting each of these areas is non-negotiable. The goal is to de-risk the project as much as possible before you invest the first dollar in the ground.
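One way to compare candidate sites against the checklist is a simple weighted score. The sketch below is only an illustration: the category weights, the 0 to 10 rating scale, and the two example sites are all hypothetical, and you would tune them to your own priorities.

```python
# Hypothetical weights per checklist category (they sum to 1.0).
WEIGHTS = {
    "power": 0.35,
    "network": 0.20,
    "environment": 0.20,
    "permitting": 0.15,
    "accessibility": 0.10,
}


def score_site(ratings: dict[str, float]) -> float:
    """Weighted average of 0-10 ratings; every category must be rated."""
    missing = set(WEIGHTS) - set(ratings)
    if missing:
        raise ValueError(f"unrated categories: {sorted(missing)}")
    return sum(WEIGHTS[category] * ratings[category] for category in WEIGHTS)


# Made-up ratings for two candidate sites.
site_a = {"power": 9, "network": 7, "environment": 8, "permitting": 5, "accessibility": 6}
site_b = {"power": 6, "network": 9, "environment": 7, "permitting": 9, "accessibility": 8}
print(f"Site A: {score_site(site_a):.2f}, Site B: {score_site(site_b):.2f}")
```

A weighted average can hide a disqualifying weakness, so treat hard requirements such as a floodplain location or no utility power commitment as pass/fail gates before scoring anything.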

By meticulously planning these three pillars—needs, site, and budget—you establish a resilient foundation. This strategic groundwork is what positions your data center project for success from day one.

Designing for High-Density Power and Cooling

Power and cooling are the lifeblood of your data center. They are the dynamic duo that dictates not just performance but your long-term operational expenditures. A miscalculation here doesn't just create a budget headache; it can cripple your facility's ability to scale. This is where you move from architectural plans to the systems that keep the lights on and the servers from melting.

Getting this right starts with a brutally honest assessment of your power load. You need to calculate your Day 1 requirements with precision, then project your needs five years out. Don't forget to account for the explosive growth of high-density AI workloads—this foresight is non-negotiable.

We're in a boom cycle for data center construction, and power availability is often the single biggest bottleneck to growth in primary markets. Globally, 2023 saw an all-time high of 3,077.8 MW under construction, a jump of 46% year-over-year. This trend highlights just how critical it is to secure significant power from the grid from the outset, which is why many builders are now focusing on secondary markets with more available capacity.

Architecting Electrical Redundancy

Once you have your power forecast, the next challenge is designing a system that can survive disruptions. This is where redundancy models come into play, and your choice has massive implications for both uptime and cost.

- N+1 Redundancy: This is the workhorse of the industry. For every 'N' components you need, you have one extra (the '+1') on standby. If a UPS fails, the backup unit seamlessly takes over. It's a pragmatic balance of reliability and investment, making it suitable for most enterprise and colocation facilities.

- 2N Redundancy: This is the "belt and suspenders" approach. It provides a fully independent, mirrored infrastructure—two complete power systems running in parallel. This gives you fault tolerance against a complete system failure, but it comes at a significantly higher capital and operational cost.
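The cost gap between the two models is easier to see as a unit count. This is a minimal sketch assuming equally sized modules; the function name, the 1,800 kW load, and the 500 kW module size are illustrative assumptions.

```python
import math


def units_required(load_kw: float, unit_kw: float, scheme: str) -> int:
    """Number of equally sized units (for example UPS modules) for a redundancy scheme."""
    if load_kw <= 0 or unit_kw <= 0:
        raise ValueError("load_kw and unit_kw must be positive")
    n = math.ceil(load_kw / unit_kw)  # 'N': units needed just to carry the load
    if scheme == "N":
        return n
    if scheme == "N+1":
        return n + 1  # one spare unit on standby
    if scheme == "2N":
        return 2 * n  # a complete mirrored system
    raise ValueError(f"unknown scheme: {scheme}")


# Hypothetical example: 1,800 kW critical load served by 500 kW modules.
for scheme in ("N", "N+1", "2N"):
    print(scheme, units_required(1800, 500, scheme))
```

The sketch counts modules only. A real 2N design also duplicates distribution paths, switchgear, and often generators, which is where much of the extra cost comes from.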

Beyond just delivering power, you have to ensure its quality is pristine. Sensitive IT equipment can be slowly degraded or destroyed by electrical noise and fluctuations over time. It's essential to master electrical power quality diagnostics to prevent these silent killers. Uninterruptible power supplies (UPS) provide the first line of defense against grid sags and surges, while generators offer long-term backup during extended outages.

Modern Cooling Strategies for Dense Environments

With great power density comes great heat. The traditional air-cooling methods that worked for years are simply no match for modern server racks that can draw 30 kW or more. The game has changed, and the goal now is to remove heat with surgical precision. This is the key to achieving a low Power Usage Effectiveness (PUE) score.

The PUE metric is a simple but powerful ratio: Total Facility Energy / IT Equipment Energy. A perfect score is 1.0, but in the real world, anything between 1.2 and 1.5 is considered excellent for a modern facility.

Your cooling strategy is the single biggest variable you can control to lower your PUE. Shifting from brute-force air conditioning to targeted heat removal is the key to building an efficient data center that doesn't waste millions on energy.
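As a quick illustration of the PUE ratio described above, here is a small sketch. The function name and the energy figures are made up for the example; in practice both inputs come from metering over the same time period.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("it_equipment_kwh must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be less than IT energy")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical month: 1,300,000 kWh drawn by the facility, 1,000,000 kWh of it by IT gear.
monthly_pue = pue(1_300_000, 1_000_000)
overhead_kwh = 1_300_000 - 1_000_000  # cooling, power conversion losses, lighting, and so on
print(f"PUE: {monthly_pue:.2f}, overhead: {overhead_kwh:,} kWh")
```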

Comparing Cooling Technologies

Choosing the right cooling approach depends entirely on your rack density, local climate, and budget. Here’s a quick rundown of the leading methods being used today.

| Cooling Method | Best For | Pros | Cons |
|---|---|---|---|
| Hot/Cold Aisle Containment | Low-to-medium density (5-15 kW/rack) | Simple, cost-effective, improves CRAC unit efficiency. | Limited effectiveness for high-density racks. |
| Rear Door Heat Exchangers | Medium-to-high density (15-30 kW/rack) | Captures heat directly at the source, cooling-neutral to the room. | Adds weight and complexity to each rack. |
| Direct-to-Chip Liquid Cooling | Very high density (>30 kW/rack) | Extremely efficient heat removal, enables maximum server performance. | Higher upfront cost and requires specialized plumbing. |

By implementing a strategy like aisle containment, you prevent hot and cold air from mixing, which drastically improves the efficiency of your Computer Room Air Conditioning (CRAC) units. But for hyperscale deployments running AI clusters, direct-to-chip liquid cooling is quickly becoming the standard. It uses circulated liquid to pull heat directly from processors, allowing for much greater server density without the risk of overheating. Adopting this kind of forward-thinking approach is fundamental to building a data center that's ready for the future.
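The density bands in the comparison table can be expressed as a simple lookup, which is handy for a first-pass screen of a rack plan. This is only a sketch: the function name is hypothetical, and assigning the boundary values of exactly 15 and 30 kW to the lower band is an assumption, since the table lists them in both bands. Climate and budget still need to be weighed separately.

```python
def suggest_cooling(rack_kw: float) -> str:
    """Map rack power density (kW per rack) to the cooling method from the comparison table."""
    if rack_kw <= 0:
        raise ValueError("rack_kw must be positive")
    if rack_kw <= 15:
        return "Hot/Cold Aisle Containment"
    if rack_kw <= 30:
        return "Rear Door Heat Exchangers"
    return "Direct-to-Chip Liquid Cooling"


# Hypothetical rack plan mixing storage, general compute, and AI training racks.
for density in (8, 22, 45):
    print(f"{density} kW/rack -> {suggest_cooling(density)}")
```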

From Blueprint to Reality: Managing Data Center Construction

With a finalized design and permits in hand, your project moves off the page and onto the job site. This is where the careful planning of the past few months collides with the gritty reality of construction. Success from here on out boils down to logistics, relentless coordination, and a deep appreciation for what makes a data center build so unique.

Let's be clear: this isn't your average commercial construction project. The tolerances are razor-thin, the integrated systems are incredibly complex, and a small mistake can have massive financial and operational consequences down the line.

Choosing Your Build Partners

This is arguably the most critical decision you'll make during the entire build phase. Your general contractor absolutely must have a proven track record with data centers. Don't just take their word for it—ask for case studies, talk to their former clients, and if possible, walk through a facility they’ve completed. A team that already understands the nuances of installing raised floors, routing miles of fiber, and coordinating the delivery of a 40-ton generator will save you from a world of expensive rookie mistakes.

Beyond the GC, you’ll need a roster of specialized subcontractors. The sheer scale and complexity of a modern data hall’s network infrastructure, for instance, requires true experts. Taking the time to properly evaluate various cable contractor companies is a vital step. The right partner ensures your physical network layer is flawless and ready to support high-speed traffic from day one.

A Lesson from the Field: One of the most common and costly pitfalls is underestimating lead times for critical equipment. We've seen projects stall because someone forgot that generators, switchgear, and large-scale UPS systems can have delivery timelines of 52 weeks or more. Ordering this long-lead gear must be one of the very first things you do once the design is locked.
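Working backward from the date equipment must be on site gives you a hard order-by date. The sketch below assumes a hypothetical on-site date and a four-week buffer; only the 52-week lead time comes from the example above.

```python
from datetime import date, timedelta


def latest_order_date(needed_on_site: date, lead_time_weeks: int, buffer_weeks: int = 0) -> date:
    """Latest date an order can be placed and still arrive by `needed_on_site`."""
    if lead_time_weeks < 0 or buffer_weeks < 0:
        raise ValueError("lead_time_weeks and buffer_weeks must be non-negative")
    return needed_on_site - timedelta(weeks=lead_time_weeks + buffer_weeks)


# Hypothetical: generators must be on site by 1 March 2027, with a 52-week lead time
# and a 4-week buffer for shipping and receiving inspections.
order_by = latest_order_date(date(2027, 3, 1), lead_time_weeks=52, buffer_weeks=4)
print(f"Place the generator order by {order_by.isoformat()}")
```

If the order-by date falls before the design is expected to lock, that is an early signal to either pull design decisions forward or reserve manufacturing slots ahead of final specifications.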

Keeping the Project on Track

A detailed project plan with clear milestones is your command center. This isn’t a document you create and file away; it's a living, breathing tool you'll use daily to track progress and spot potential roadblocks before they derail your timeline. In this market, time is everything.

The global data center market is expected to hit US$527.46 billion in 2025, and with global vacancy rates dropping to a historic low of just 6.6%, the pressure to deliver is immense. Every single day of construction delay is a day of lost revenue opportunity. Data Center Knowledge covers these market dynamics and construction trends in more detail.

Here’s a look at the typical flow of major construction milestones:

- Site Prep and Foundation: Kicking things off with excavation, grading, and pouring the slab that will carry the weight of the entire facility.

- Going Vertical: The structural steel goes up, giving the building its skeleton and defining the physical space.

- Closing the Box: The walls and roof are installed. This "weather-tight" milestone is huge, as it allows the sensitive interior work to begin.

- The MEP Marathon: This is the most complex and time-consuming phase. It involves weaving together all the mechanical, electrical, and plumbing systems that are the lifeblood of the data center.

- The Fit-Out: With the core systems in, the focus shifts to installing raised floors, cable trays, and fire suppression, getting the data halls ready for the first racks to roll in.

The Permit and Inspection Gauntlet

Don't underestimate how badly the permitting and inspection process can slow you down if you aren't proactive. From the electrical rough-in to the final fire system test, every major step requires a sign-off from local authorities. A great project manager lives and breathes the inspection schedule, ensuring all work is done to code before the inspector even steps on site.

Failing an inspection isn't just a two-day setback. It creates a domino effect, pushing back the next trade in line and throwing your entire schedule into chaos. This is where building a good, transparent relationship with local officials pays dividends. Consistent communication can turn a potential adversary into an ally, helping you navigate the bureaucracy and keep the project moving forward.

Implementing Robust Security and Network Infrastructure

Think of a data center as the modern equivalent of a bank vault. Instead of gold, it protects an organization's most critical asset: its data. Securing this digital gold requires a strategy that’s as much about physical barriers as it is about firewalls. You have to build concentric circles of security, starting at the property line and drilling all the way down to the server rack.

At the same time, the network infrastructure is the facility's central nervous system. A bad network design creates bottlenecks that kill performance and torpedo reliability. Nailing the network foundation is just as critical as bolting down the physical space it runs through.

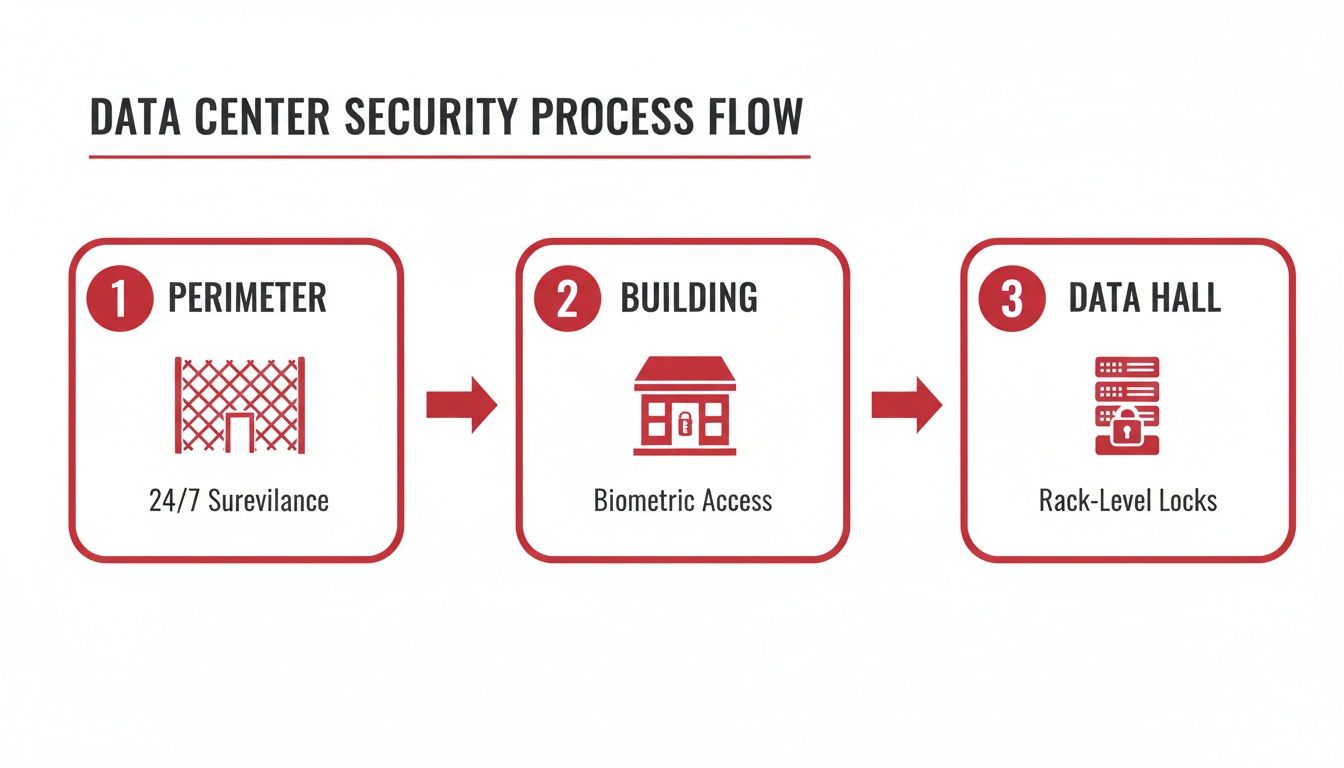

Layering Physical Security Measures

Good physical security isn’t about one single impenetrable wall; it’s about layers of deterrence and detection. The idea is to make getting to the data halls progressively harder with each step. It all starts with the perimeter—the first and arguably most important line of defense.

This outer layer needs more than just a fence. We're talking about serious perimeter control: vehicle crash barriers, comprehensive video surveillance covering every angle of approach, and controlled entry points. Once past the perimeter, getting into the building itself should require more than just a simple key card.

- Mantraps: I’m a big believer in these. They're basically small, secure vestibules with interlocking doors. You can't open the second door until the first one closes and you've been authenticated again. It’s the single best way to stop "tailgating," where someone slips in behind an authorized person.

- Biometric Access: For the truly sensitive areas like the data halls, biometric scanners (fingerprint, iris, or palm) are non-negotiable. You can't lose or steal a fingerprint like you can a keycard, providing a much stronger guarantee of identity.

- Continuous Surveillance: You need high-definition cameras monitoring all critical areas 24/7. This isn't just about recording; it's about active monitoring. On-site security personnel must be ready to respond to alerts the second they happen.
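The mantrap interlock described above comes down to two rules: a door opens only after a fresh authentication, and never while the other door is open. Here is a toy model of that logic. The class and method names are hypothetical, and real access control systems add timeouts, occupancy sensing, and alarms that this sketch leaves out.

```python
class Mantrap:
    """Two-door vestibule in which at most one door can be open at a time."""

    def __init__(self) -> None:
        self.outer_open = False
        self.inner_open = False

    def open_outer(self, authenticated: bool) -> bool:
        """Open the outer door only if authenticated and the inner door is closed."""
        if authenticated and not self.inner_open:
            self.outer_open = True
            return True
        return False

    def open_inner(self, authenticated: bool) -> bool:
        """Open the inner door only if authenticated again and the outer door is closed."""
        if authenticated and not self.outer_open:
            self.inner_open = True
            return True
        return False

    def close_outer(self) -> None:
        self.outer_open = False

    def close_inner(self) -> None:
        self.inner_open = False


trap = Mantrap()
trap.open_outer(authenticated=True)          # visitor badges in at the outer door
blocked = trap.open_inner(authenticated=True)  # refused: the outer door is still open
trap.close_outer()
granted = trap.open_inner(authenticated=True)  # allowed after the outer door closes
print(blocked, granted)
```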

I’ve seen projects where millions were spent on the data hall door, but the loading dock was secured with a standard lock. An attacker will always find the weakest link—don't let it be an unmonitored fence line or a back entrance you forgot about.

Designing a Scalable Network Topology

The structured cabling is the literal bedrock of your data center's performance. You can’t just plan for today’s bandwidth needs; you have to anticipate the exponential growth of tomorrow. This requires a meticulous approach to designing a network topology that is both resilient and easy to scale.

Your cabling plan has to be documented with almost obsessive precision. I mean every single connection, from the carrier handoff in the "meet-me room" to the top-of-rack switch in row 42. This documentation, often kept in a detailed cabling matrix, is your bible for installation, troubleshooting, and any future upgrades.

For any modern hyperscale or AI-focused build, fiber optic cabling is the only real choice. The specific type of fiber (single-mode for distance, multi-mode for shorter runs) and connector (like LC or MPO) will depend on your exact needs. High-density racks, for instance, are increasingly using MPO connectors to bundle many fiber strands, which is how you support insane speeds of 400G and beyond. If you’re building from the ground up, looking into turnkey network solutions can save a lot of headaches by bundling the design, procurement, and installation.

Ensuring Uptime with Carrier Neutrality

Finally, your data center’s connection to the outside world is its lifeline. If you rely on a single internet service provider, you’ve created a massive single point of failure. One stray backhoe cutting a fiber optic cable miles away could knock your entire facility offline.

The solution is carrier neutrality. It’s a simple but powerful principle: bring in redundant connections from multiple, diverse network providers. When one carrier has an outage, your traffic can fail over to another (typically through BGP multihoming), keeping you online.

For this to work, you need true physical diversity. The carriers' lines should enter your building at different points and follow completely separate geographic paths. This is how you build an infrastructure that can withstand real-world failures and meet the tough service level agreements (SLAs) your customers demand.
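A diversity check like this can be automated once you have route data from each carrier. The sketch below is an assumption-heavy illustration: the data layout, carrier names, and duct identifiers are all invented, and real route data usually arrives as maps or KMZ files that need interpretation first.

```python
from itertools import combinations


def find_shared_risks(routes: dict) -> list:
    """Return (carrier_a, carrier_b, reason) for every pair that is not physically diverse.

    `routes` maps a carrier name to {"entry": str, "segments": set of duct or conduit IDs}.
    """
    issues = []
    for a, b in combinations(sorted(routes), 2):
        route_a, route_b = routes[a], routes[b]
        if route_a["entry"] == route_b["entry"]:
            issues.append((a, b, f"same building entry: {route_a['entry']}"))
        shared = route_a["segments"] & route_b["segments"]
        if shared:
            issues.append((a, b, f"shared segments: {sorted(shared)}"))
    return issues


# Hypothetical route data for three carriers.
routes = {
    "carrier_a": {"entry": "north", "segments": {"duct-1", "duct-2"}},
    "carrier_b": {"entry": "south", "segments": {"duct-7", "duct-8"}},
    "carrier_c": {"entry": "south", "segments": {"duct-2", "duct-9"}},
}
issues = find_shared_risks(routes)
for carrier_a, carrier_b, reason in issues:
    print(f"{carrier_a} / {carrier_b}: {reason}")
```

In this made-up example only carrier_a and carrier_b are truly diverse from each other; carrier_c shares a duct with one and a building entry with the other.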

Mastering the Commissioning and Handover Process

After all the planning, permitting, and construction, your data center is finally standing. But don't start moving in servers just yet. Before the facility can go live, it has to survive the final trial by fire: commissioning.

This isn't a simple flick of the switch. It's an incredibly thorough, multi-stage process designed to prove that every single system—from the massive generators down to the smallest circuit breaker—performs exactly as intended under real-world stress. I’ve seen projects stumble at this final hurdle, and I can tell you that cutting corners here is a direct path to a catastrophic outage later. The entire goal is to find and fix every hidden flaw before a single customer workload enters the building.

The Five Levels of Commissioning Explained

Commissioning isn't a single event; it's a carefully orchestrated sequence of tests that build on each other. We break it down into five distinct levels, moving from inspecting individual parts to stress-testing the entire, fully integrated facility.

It all starts well before the heavy equipment even arrives on your property.

- Level 1, Factory Acceptance Testing (FAT): Your team quite literally goes to the factory. Whether it's for your generators, UPS systems, or large-scale switchgear, you'll witness the manufacturer run the equipment through its paces. This is your first chance to confirm it meets your exact specifications at the source.

- Level 2, Site Inspection and Verification: Once the crates are opened on-site, it’s all about verification. You’re meticulously checking that you received the correct models, that nothing was damaged in transit, and that every piece is installed in precisely the right location according to the design documents. These early checks prevent major headaches down the line.

Ramping Up to Integrated Systems Testing

With the foundational checks complete, the testing intensity increases dramatically. This is where we shift from verifying components to simulating real-world operations and, more importantly, failures.

Level 3 is where we start powering things up. This phase focuses on starting and testing each piece of equipment in isolation. Technicians will fire up the generators, energize the switchgear, and run diagnostics on individual Computer Room Air Conditioning (CRAC) units. It's a systematic process to ensure every component works correctly on its own.

From there, we move to Level 4, systems integration testing. This is where the magic happens. Instead of just starting one generator, we'll simulate a full utility power failure. Do all the backup generators start automatically? Do they synchronize with each other? Can they seamlessly accept the entire building load? This is where you find out if your redundant systems actually work together as a cohesive unit.

A single generator might pass its solo test with flying colors, but a subtle flaw in the control logic could prevent it from syncing correctly during an actual outage. Level 4 is designed specifically to hunt for those interactive failures in a controlled environment, not during a real emergency.

The final boss of commissioning is Level 5: Integrated Systems Testing (IST). This is the ultimate endurance run. Using massive load banks to simulate the full electrical and thermal load of a packed data center, we push the facility to its absolute limits for an extended period—often 24 to 72 hours straight. During the IST, we'll intentionally trigger multiple, cascading failures to see how the entire ecosystem responds under maximum duress.

The table below breaks down these five critical stages.

The Five Levels of Data Center Commissioning

This commissioning framework is the industry standard for ensuring a new data center is truly ready for production. Each level builds on the success of the previous one, culminating in a fully-vetted facility.

| Commissioning Level | Objective | Example Activities |
|---|---|---|
| Level 1: FAT | Verify equipment meets specs at the factory. | Witnessing a generator load test at the manufacturer's plant. |
| Level 2: Site Verification | Confirm correct and undamaged equipment is installed. | Visually inspecting switchgear for shipping damage and proper placement. |
| Level 3: Component Startup | Test individual equipment functionality in isolation. | Powering on a single CRAC unit and verifying its performance. |
| Level 4: Systems Integration | Test how redundant systems work together. | Simulating a utility outage to test generator and UPS failover. |
| Level 5: IST | Validate total facility performance under full load. | Running the data center on load banks for 48 hours while simulating faults. |

Passing all five levels is the green light that signifies your data center is robust, resilient, and ready for its operational life.
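Because each level builds on the one before it, commissioning status can be tracked as a simple ordered gate. This is a minimal sketch with a hypothetical function name and result format, not a description of any particular commissioning tool.

```python
from typing import Optional

LEVELS = {
    1: "Factory Acceptance Testing",
    2: "Site Verification",
    3: "Component Startup",
    4: "Systems Integration",
    5: "Integrated Systems Testing",
}


def next_required_level(results: dict) -> Optional[int]:
    """Return the lowest level not yet passed, or None when all five have passed.

    `results` maps a level number to True (passed) or False (failed or not run).
    A pass at a later level does not count until every earlier level has passed.
    """
    for level in sorted(LEVELS):
        if not results.get(level, False):
            return level
    return None


# Hypothetical status: Level 3 failed a retest even though Level 4 was attempted.
status = {1: True, 2: True, 3: False, 4: True}
blocking = next_required_level(status)
print(f"Blocked at Level {blocking}: {LEVELS[blocking]}")
```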

While you're validating the infrastructure's resilience through commissioning, it's crucial to remember the physical security that protects it. The layered defense model shown above—from the perimeter fence to the rack itself—is just as critical as the power and cooling systems you're testing.

Executing a Smooth Operational Handover

Once your facility has passed its commissioning trials, the final step is handing the keys to the operations team. A poorly managed handover can undermine months of hard work. A successful transition is built on three pillars: documentation, training, and tools.

First, meticulous documentation is non-negotiable. Your ops team needs a complete library of as-built drawings, all equipment manuals, and the full commissioning test report binder. This library becomes their bible for day-to-day work and future troubleshooting.

Next, you need to arm them with clear Standard Operating Procedures (SOPs) for routine tasks and Emergency Operating Procedures (EOPs) for crisis response. These guides provide vetted, step-by-step instructions for everything from performing monthly generator maintenance to responding to a chiller plant failure.

Finally, a powerful Data Center Infrastructure Management (DCIM) platform is essential. It gives the team real-time visibility into power, cooling, and capacity, turning a flood of raw data into the actionable insights they need to maintain uptime and operate efficiently from day one.

Common Questions About Building a Data Center

Even with the most detailed blueprint in hand, building a data center from the ground up naturally brings a lot of questions to the surface. It's a massive undertaking, both in terms of capital and complexity, so it’s only right that stakeholders want clear answers to the most pressing concerns. Getting these conversations started early helps everyone align on expectations and take a lot of the risk out of the project right from the beginning.

Here are some of the questions I hear most often from clients as they get started, along with some straightforward insights from years in the field.

How Long Does It Take to Build a Data Center?

This is always the first question, and the honest answer is: it depends. The timeline can shift dramatically based on the scale of the build, the specific location, and the intricacy of your design.

For a standard enterprise or colocation facility, you should be prepared for a 12 to 24-month journey. That's from the very first site survey all the way to flipping the switch at final commissioning.

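Several major phases drive that schedule, and each one has its own potential to create delays. Run strictly back to back, the ranges below total 21 to 41 months, so hitting a 12 to 24-month window depends on overlapping phases, such as progressing the design while permitting is still underway.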

- Site Selection & Acquisition: Finding and securing the right piece of land is foundational. This can take anywhere from 3 to 6 months, and that's assuming a suitable site is even available.

- Permitting & Zoning: This is, without a doubt, the biggest wildcard. Navigating the maze of local government approvals can easily eat up 6 to 12 months—and in some places, much longer. It’s a common source of frustration.

- Design Phase: The hard work of turning your operational needs into detailed architectural and engineering documents usually takes a solid 3 to 5 months.

- Construction: The physical build itself, from pouring concrete to installing racks, will typically last between 9 and 18 months, depending on the facility's size.

And don't forget the supply chain. Critical equipment with long lead times, like generators or custom switchgear, can take over a year to be delivered. If you don't get those orders in early, your entire project schedule can be thrown off track.

What Are the Biggest Risks in a Data Center Construction Project?

Knowing where things can go wrong is the first step to making sure they don't. From my experience, the major risks in any data center build consistently fall into three buckets: budget, schedule, and performance.

Budget overruns are an ever-present concern. They’re often triggered by things you can't see, like discovering poor soil quality during excavation. But they can also come from sudden spikes in material costs or scope creep when design changes are made mid-project.

Schedule delays are just as common. As I mentioned, the permitting process is a frequent culprit, but so are skilled labor shortages or those nagging supply chain issues for critical components.

The most dangerous risk, though, is a performance failure. This is when the finished facility just doesn't meet its design goals during commissioning. Missing your Power Usage Effectiveness (PUE) target or failing to achieve the Tier certification you promised your customers can have devastating financial and operational fallout.

The best defense against these risks is always solid project management, bringing in experienced partners, and having detailed contingency plans ready to go.

What Is the Difference Between Tier III and Tier IV Data Centers?

The Uptime Institute's Tier standards are the industry's go-to benchmark for reliability, and the choice between Tier III and Tier IV is a constant topic during the design phase. It really boils down to two things: your business's tolerance for downtime and your budget.

A Tier III data center is designed to be 'Concurrently Maintainable.'

In simple terms, this means any single piece of power or cooling equipment can be taken offline for maintenance or repair without disrupting your IT operations. It pulls this off with N+1 redundancy and is typically quoted at 99.982% uptime, which works out to roughly 1.6 hours of downtime per year.

A Tier IV data center is a whole other level. It’s built for 'Fault Tolerance.'

This design uses 2N or even 2N+1 fully redundant systems, creating completely separate and isolated power and cooling paths. It's engineered to withstand any single, unplanned equipment failure without affecting the critical IT load at all. It is typically quoted at 99.995% uptime, or about 26.3 minutes of potential downtime annually.
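The downtime figures for both tiers follow directly from the availability percentages. Here is a short sketch of the arithmetic, assuming an 8,760-hour year (leap years ignored); the function name is illustrative.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years are ignored


def annual_downtime_hours(availability_pct: float) -> float:
    """Expected downtime per year, in hours, for a given availability percentage."""
    if not 0 <= availability_pct <= 100:
        raise ValueError("availability_pct must be between 0 and 100")
    return (1 - availability_pct / 100) * HOURS_PER_YEAR


tier_iii_hours = annual_downtime_hours(99.982)
tier_iv_minutes = annual_downtime_hours(99.995) * 60
print(f"Tier III: {tier_iii_hours:.2f} hours per year")
print(f"Tier IV: {tier_iv_minutes:.1f} minutes per year")
```

The same function makes it easy to translate any SLA percentage a customer asks for into the downtime budget your design has to meet.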

Ultimately, the right choice depends entirely on how critical your applications are. A Tier IV facility offers the highest possible resilience, but it comes at a significantly higher cost, both in the initial build and in long-term operational expenses.

Building a reliable data center requires a partner with deep expertise in every phase of infrastructure deployment. From site preparation to integrating complex power and network systems, Southern Tier Resources delivers the end-to-end construction and engineering services needed to bring your vision to life.

Discover how our turnkey solutions can ensure your next data center project is delivered on time, within budget, and to the highest standards of quality. Learn more at https://southerntierresources.com.