Data center connectivity is, quite simply, how data gets around. It’s the web of physical and logical connections that lets information flow between servers, storage systems, and networks inside a data center—and, just as importantly, connects that data center to the rest of the world.

Think of these connections as the essential arteries of our digital economy, responsible for moving everything from your favorite streaming show to complex AI computations.

The Unseen Engine of Our Digital World

Every cloud service we use, every video we stream, and every AI-powered search we run is powered by an engine we never see: data center connectivity. It's helpful to picture a data center not as a single building, but as a buzzing digital metropolis. The intricate network of fiber optic cables and high-end hardware inside forms its system of superhighways, railways, and local roads, all engineered to move data at blistering speeds.

This digital city has a few key residents, and each one has very different traffic needs:

- Hyperscalers: These are the giants running the major cloud platforms. They need continent-spanning superhighways to link their massive, distributed infrastructure.

- Carriers and ISPs: Think of them as the internet's logistics companies. Their focus is on building efficient routes to deliver data to millions of homes and businesses.

- Enterprises: These are businesses that depend on private, highly secure data transport for their most critical applications, whether it's for financial trading or managing sensitive healthcare records.

The High Stakes of Digital Logistics

The challenges for these players are enormous. Even a millisecond of delay—what we call latency—can throw off financial markets or make a cloud application feel sluggish. Not having enough capacity creates digital traffic jams, grinding business operations to a halt.

In this environment, data center connectivity solutions are no longer just an IT issue. They are a core business asset that directly impacts performance, the ability to grow, and a company's competitive edge.

To get a handle on the scale of this, it helps to start with network infrastructure, the backbone of any modern operation. Demand for bigger and faster infrastructure is surging.

In 2023, global data center construction hit an all-time high, with 3,077.8 megawatts (MW) of capacity under construction. That's a 46% year-over-year increase, driven largely by demand from AI and cloud computing. The US alone, home to more than 5,400 data centers, saw vacancy rates fall to a record low of 2.8%.

This incredible growth puts a spotlight on just how critical it is to have experienced partners on the ground to prevent connectivity from becoming a bottleneck. Building the physical arteries that keep the digital world moving—from meticulously laid fiber pathways to complete data center fit-outs—demands real-world expertise.

This is exactly where turnkey infrastructure partners like Southern Tier Resources come in, making sure the foundational connections are solid enough to support whatever comes next. You can dig into more of the latest data center statistics over on Brightlio.com.

Your Guide to Core Data Center Connectivity Options

Choosing the right data center connectivity is a lot like picking the right shipping method for critical cargo. You wouldn't use a bicycle courier for a coast-to-coast delivery, and you wouldn't charter a cargo plane to send a package across the street. Each connectivity option is a specialized tool, and knowing which one to use is the key to building a network that's both high-performing and cost-effective.

These solutions are the lifelines connecting your infrastructure to partners, clouds, and customers. Let's break down the main players, using some straightforward analogies to see how they work and where they shine.

Dark Fiber: The Private Superhighway

Imagine having exclusive access to a brand-new, perfectly paved superhighway. It’s completely empty—no other cars, no traffic lights, and you set the speed limit. You decide what vehicles to use, how fast they go, and which lanes they travel in.

That's dark fiber: raw, unlit fiber-optic cable that an organization leases or buys outright. Because you supply the optical equipment that lights it, usable bandwidth is limited mainly by that equipment, and you get complete control over your network gear and protocols.

- Who uses it? Hyperscalers, major financial institutions, and large carriers—anyone with massive, predictable data transfer needs between critical locations.

- Why choose it? For the ultimate in control, security, and long-term scalability. It’s a capital-intensive model (CapEx), but at a massive scale, it can be incredibly cost-effective.

The trade-off, of course, is responsibility. You’re in charge of "lighting" the fiber with your own optical equipment and managing the entire network from end to end. This demands significant in-house expertise, which is why many organizations turn to partners for comprehensive fiber network services that handle the heavy lifting of engineering and installation.

Lit Services: The Managed Express Lane

Now, picture that same highway again. Instead of leasing the whole road, you’re buying access to a dedicated, fully managed express lane. The highway operator provides the vehicles (network equipment), sets a guaranteed speed (bandwidth), and ensures your cargo arrives on time, every time.

This is a lit service. A carrier provides a ready-to-use connection with a specific, guaranteed bandwidth—like 10 Gbps or 100 Gbps—over their existing network. This is often sold as a wavelength service or a dedicated internet access (DIA) circuit.

A lit service is essentially bandwidth-as-a-service. It shifts the burden of network management to the provider and offers a predictable operational expense (OpEx) model. This makes it incredibly attractive for businesses needing high performance without the headache of managing their own optical gear.

This option is perfect for enterprises connecting to the cloud, linking corporate offices, or needing a rock-solid internet connection backed by a strong Service Level Agreement (SLA).

Ethernet Services: The Versatile City Grid

Think of Ethernet services as the intricate grid of local streets and arterial roads in a major city. This network is incredibly flexible, letting you create multiple routes of different sizes to connect various buildings and districts. It’s built for efficiently handling everyday traffic that needs to reach many different destinations.

Ethernet services offer scalable, flexible connectivity that makes it simple to link multiple sites in point-to-point, point-to-multipoint, or any-to-any configurations. Because they operate at Layer 2, they are often simpler to manage than routing-heavy Layer 3 networks, and they are available at speeds from a few megabits per second up to multiple gigabits per second. They're a workhorse for connecting enterprise branches to a central data center or creating a private network between business locations.

Cross-Connects: The Direct On-Ramp

A cross-connect is the most direct connection of all. Imagine two businesses in the same industrial park. A cross-connect is the physical cable running directly between their buildings—a private on-ramp that bypasses all public roads.

Inside a data center, a cross-connect is that physical fiber or copper cable linking your equipment directly to a partner, carrier, or cloud provider in the same facility. Because the data travels just a few hundred feet, these connections are prized for their extremely low latency, high security, and unmatched reliability.

This is the very technology that makes carrier-neutral data centers and their "meet-me rooms" so valuable. They create these dense ecosystems where hundreds of networks can directly interconnect with maximum speed and efficiency.

Comparing Data Center Connectivity Solutions

To bring it all together, here’s a quick comparison of these core connectivity solutions. Each one solves a different problem, and understanding their strengths will help you architect the right network for your needs.

| Solution Type | Description & Analogy | Primary Use Case | Control & Management |
|---|---|---|---|
| Dark Fiber | The Private Superhighway. You lease the raw infrastructure and manage everything yourself. | Point-to-point connections with massive, predictable bandwidth needs (e.g., data center to data center). | Full Control. You are responsible for all optical equipment and network management. |
| Lit Services | The Managed Express Lane. You buy a specific amount of bandwidth from a provider. | High-capacity, reliable connections with guaranteed performance (SLAs) for cloud access or internet. | Provider Managed. The carrier handles the network; you just use the service. |
| Ethernet | The Versatile City Grid. Flexible, scalable connectivity for linking multiple sites together. | Building private WANs, connecting branch offices to a data center, or multi-cloud connectivity. | Shared Control. You manage your endpoints, but the underlying network is provider-managed. |
| Cross-Connect | The Direct On-Ramp. A direct physical cable linking two entities within the same data center. | Ultra-low latency connections to partners, clouds, or network providers in the same facility. | Direct Connection. No third-party management; it's a simple, physical link. |

Ultimately, most modern data center strategies don’t rely on a single option. Instead, they blend these solutions—using dark fiber for the backbone, lit services for cloud on-ramps, and cross-connects for critical partner ecosystems—to create a resilient, high-performance, and cost-effective architecture.

Architecting for Unbreakable Network Performance

Choosing the right data center connectivity solution is just the start. The real magic happens when you arrange those connections into a resilient, high-performance architecture. It's one thing to have a fast connection; it's another entirely to have a network that can shrug off unexpected failures, grow with you, and deliver rock-solid performance for the applications that matter most. This means thinking beyond individual components and designing a holistic system with no single point of failure.

Think of your main data center connection like a single highway into a bustling city. If that highway shuts down for repairs or an accident, everything grinds to a halt. A truly resilient architecture builds multiple, physically separate highways on different routes. No matter what happens to one, traffic keeps flowing. This is the simple but powerful idea behind diversity and redundancy.

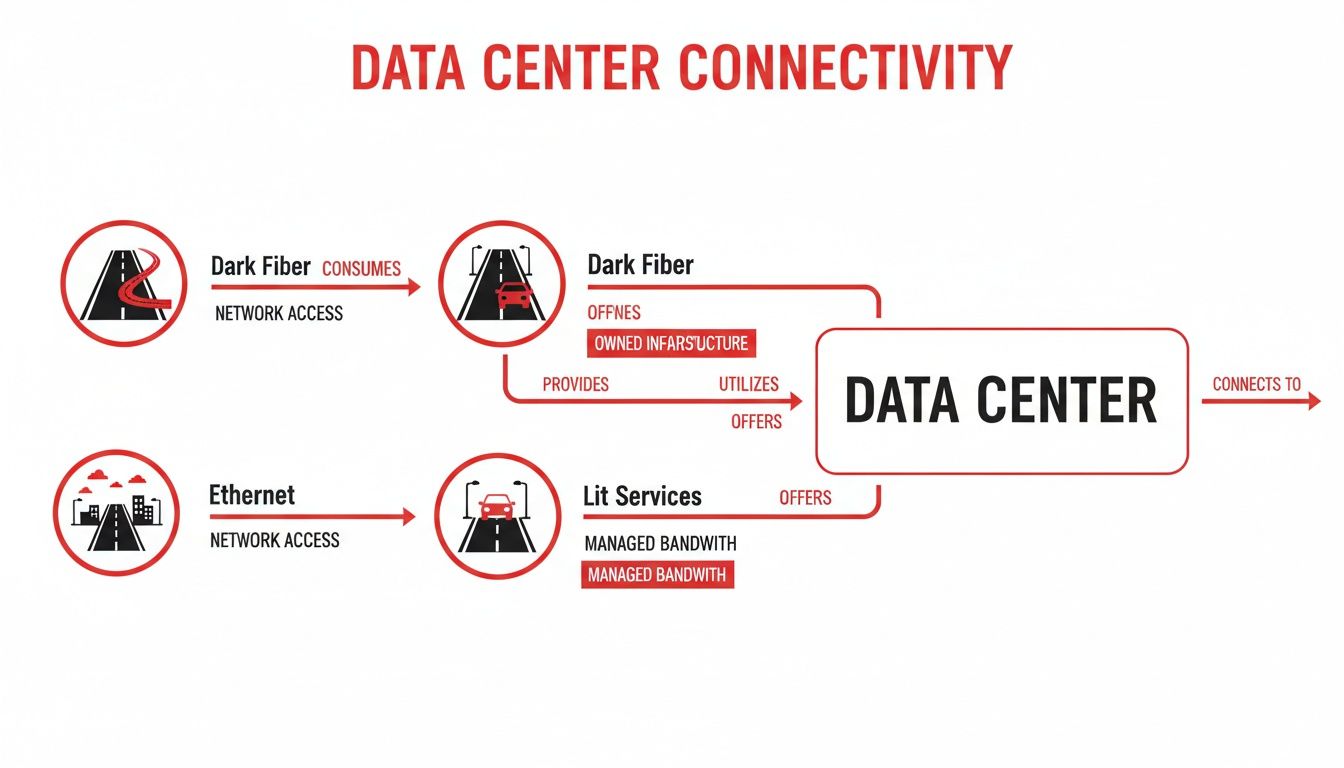

This flowchart breaks down how different connectivity options become the building blocks for these robust network designs.

You can see how options like Dark Fiber, Lit Services, and Ethernet act as different kinds of pathways—from private superhighways to managed toll roads—that can be woven together to build a network that just won't quit.

Building Resilience with Redundancy Models

In network engineering, redundancy isn't about excess; it's about insurance. It’s the guarantee that a backup system is hot and ready to take over the instant a primary component falters. It's the same principle as having backup generators for a hospital—you hope you never use them, but they are absolutely critical for continuity when the main power grid goes down.

Two of the most common models you'll encounter are:

- N+1 Redundancy: This approach provisions one extra component ("+1") beyond the number you need to operate ("N"). If your workload requires three routers, an N+1 design includes a fourth. Should one of the primary three fail, the fourth one jumps into action.

- 2N Redundancy: This is a fully mirrored, or duplicated, system. For every piece of active equipment, there's an identical, active backup ready to go. While it costs more, this model provides the highest level of availability, making it the standard for systems where even a second of downtime is too long.
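To make the trade-off concrete, here is a minimal Python sketch comparing the availability of no redundancy, N+1, and 2N designs. It assumes independent failures and identical units, and the 99.9% per-router availability is a hypothetical figure, not a vendor spec. N+1 is modeled as "at least N of N+1 units up," and 2N as N mirrored pairs in which each pair needs one working unit.

```python
from math import comb


def no_redundancy(a: float, n: int) -> float:
    """All n required units must be up (no spare)."""
    return a**n


def n_plus_1(a: float, n: int) -> float:
    """At least n of n+1 identical units must be up; the single spare covers any one failure."""
    total = n + 1
    return sum(comb(total, k) * a**k * (1 - a) ** (total - k) for k in range(n, total + 1))


def two_n(a: float, n: int) -> float:
    """Each of the n active units has a dedicated mirror; every pair needs at least one unit up."""
    pair_up = 1 - (1 - a) ** 2
    return pair_up**n


# Hypothetical example: three routers required, each assumed to be 99.9% available.
UNIT_AVAILABILITY = 0.999
REQUIRED = 3

for label, model in [("N", no_redundancy), ("N+1", n_plus_1), ("2N", two_n)]:
    availability = model(UNIT_AVAILABILITY, REQUIRED)
    print(f"{label:>4}: {availability:.6%} available")
```

Under these assumptions, the single spare in N+1 cuts expected unavailability sharply, and 2N cuts it further at the cost of doubling the hardware. Real systems also suffer common-mode failures (shared power, shared conduit) that this simple model ignores.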

But deploying these models is more than just buying extra hardware. It demands careful planning of the physical fiber routes to achieve true diversity. You can dive deeper into this strategic process in our guide to fiber optic network design.
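Physical diversity can be checked against route records before anything is built. The Python sketch below treats each route as a list of the physical assets it passes through and flags any asset that two routes share, an approach often called shared risk link group (SRLG) analysis. The route names and asset IDs are hypothetical placeholders.

```python
from itertools import combinations

# Hypothetical route inventory: each path lists the physical assets
# (building entrances, conduits, bridges) it traverses. IDs are illustrative only.
ROUTES = {
    "primary": ["entrance-north", "conduit-12", "bridge-3", "conduit-40"],
    "secondary": ["entrance-south", "conduit-18", "bridge-3", "conduit-55"],
}


def shared_risks(path_a: list[str], path_b: list[str]) -> list[str]:
    """Assets that appear on both paths, i.e. places where one incident could cut both."""
    return sorted(set(path_a) & set(path_b))


def diversity_report(routes: dict[str, list[str]]) -> dict[tuple[str, str], list[str]]:
    """Compare every pair of routes and record the assets they have in common."""
    return {
        (name_a, name_b): shared_risks(path_a, path_b)
        for (name_a, path_a), (name_b, path_b) in combinations(routes.items(), 2)
    }


for (a, b), common in diversity_report(ROUTES).items():
    status = "DIVERSE" if not common else "SHARED RISK: " + ", ".join(common)
    print(f"{a} vs {b}: {status}")
```

In this example the two routes use different building entrances and conduits but cross the same bridge, so a single incident at that bridge could cut both.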

The Meet-Me Room: The Central Hub of Connectivity

Deep inside any carrier-neutral data center lies its nerve center: the Meet-Me Room (MMR). This highly secure, shared space is basically a stock exchange for data. It's the physical point where hundreds of different networks—ISPs, cloud providers, content delivery networks (CDNs), and enterprises—come together to exchange traffic directly.

The MMR is the physical marketplace where interconnection happens. A single cross-connect within the MMR can link your infrastructure directly to a global cloud provider or a key business partner, creating an ultra-low latency connection that bypasses the public internet entirely.

A data center with a vibrant, carrier-rich MMR offers a massive strategic advantage. It creates a competitive ecosystem that helps control costs, boost network performance, and gives you the agility to add or switch providers without a major overhaul. It’s the engine that powers the modern digital supply chain.

By pairing redundant architectural models with smart interconnections in an MMR, you build a network that isn't just fast but highly resilient. This foresight ensures your infrastructure can handle your ambition, scaling as you grow while insulating you from the inevitable bumps in the road. Turning these architectural blueprints into reality requires a partner skilled in the meticulous build-out of diverse pathways and structured cabling systems.

Making the Right Connectivity Choice

Choosing the right data center connectivity isn't just a technical decision; it's a business one. It’s a constant balancing act between cost, performance, and how much control you want to maintain. You're not just buying a circuit; you're building the foundation that your entire operation will run on.

To get it right, you have to look past the spec sheets and think about the real-world impact. A single millisecond of latency could decide whether a financial trade succeeds. The physical path a fiber cable takes could determine whether you stay online during a local outage. Every detail matters.

Balancing Latency and Bandwidth

People often lump latency and bandwidth together, but they solve completely different problems. Getting this right is fundamental to building a network that actually meets your needs.

Latency is about delay: the time it takes a packet to travel between two points, often measured as round-trip time. For some applications, this is everything. Think about high-frequency trading, where a few milliseconds (or even microseconds) can mean millions of dollars won or lost. The same goes for real-time AI inference, where fast responses are critical for a good user experience.
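Distance sets a hard floor on latency. As a rough sketch, the Python function below estimates round-trip propagation delay over fiber. It assumes a group index of about 1.468 for standard single-mode fiber (light travels roughly a third slower in glass than in a vacuum) and ignores equipment, queuing, and serialization delay, so real measurements will be higher.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458
GROUP_INDEX = 1.468  # assumed typical value for standard single-mode fiber


def fiber_rtt_ms(route_km: float, group_index: float = GROUP_INDEX) -> float:
    """Round-trip propagation delay over a fiber route, in milliseconds.

    Counts only time-of-flight in the glass; equipment and queuing delay are ignored.
    """
    one_way_s = route_km * group_index / SPEED_OF_LIGHT_KM_PER_S
    return 2 * one_way_s * 1000


for km in (0.1, 100, 1000, 4000):
    print(f"{km:>7} km route: {fiber_rtt_ms(km):.3f} ms round trip")
```

On these assumptions, 100 km of fiber adds just under 1 ms of round-trip delay, while a 100 m cross-connect adds about 1 microsecond. Actual fiber routes are usually longer than the straight-line distance between sites.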

Bandwidth is about capacity. Think of it like a highway: latency is how fast a single car can get from A to B, but bandwidth is how many lanes the highway has. This is your hedge against the future. With data volumes exploding, you need to ensure your network won't grind to a halt during peak hours or as your business grows.
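A simple model shows why the two matter differently. The Python sketch below estimates delivery time as one round trip plus the time to serialize the payload onto the link. It is deliberately simplified (no protocol overhead, TCP slow start, or congestion), and the 10 ms round trip and payload sizes are assumed examples.

```python
def transfer_time_s(payload_bytes: float, bandwidth_bps: float, rtt_s: float) -> float:
    """Rough delivery time: one round trip plus serialization time for the payload.

    Ignores protocol overhead, TCP slow start, and congestion.
    """
    return rtt_s + (payload_bytes * 8) / bandwidth_bps


RTT_S = 0.010  # assumed 10 ms round trip

for label, size_bytes in [("1 KB order message", 1_000), ("10 GB dataset", 10_000_000_000)]:
    for gbps in (10, 100):
        seconds = transfer_time_s(size_bytes, gbps * 1e9, RTT_S)
        print(f"{label:<20} over {gbps:>3} Gbps: {seconds:.4f} s")
```

For the small message, upgrading from 10 Gbps to 100 Gbps changes almost nothing because the round trip dominates; for the 10 GB dataset, the same upgrade cuts the time from about 8 seconds to under 1 second.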

Defining Your Performance Guarantees with SLAs

A Service Level Agreement (SLA) is much more than a support contract—it’s your performance guarantee, written in stone. This document is where your provider legally commits to specific, measurable metrics that define the quality of your service.

A solid SLA should spell out:

- Availability: The promised uptime, usually shown as a percentage like 99.99%.

- Packet Delivery: The percentage of data packets that successfully make it to their destination.

- Latency: The maximum guaranteed round-trip time between specific points.

- Jitter: The acceptable variation in latency, which is absolutely critical for voice, video, and other real-time streams.
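It helps to translate availability percentages into minutes. The Python sketch below converts an availability figure into the maximum downtime it allows over a period. It assumes a 365-day year and a 30-day month; real SLAs define their own measurement windows and exclusions, so check the contract's wording.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600; ignores leap years
MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60


def allowed_downtime_minutes(availability_pct: float, period_minutes: float = MINUTES_PER_YEAR) -> float:
    """Maximum downtime an availability percentage permits over the given period."""
    if not 0 <= availability_pct <= 100:
        raise ValueError("availability_pct must be between 0 and 100")
    return period_minutes * (1 - availability_pct / 100)


for pct in (99.9, 99.99, 99.999):
    yearly = allowed_downtime_minutes(pct)
    monthly = allowed_downtime_minutes(pct, MINUTES_PER_30_DAY_MONTH)
    print(f"{pct}% -> {yearly:.1f} min/year, {monthly:.1f} min per 30-day month")
```

At 99.99%, for example, that works out to roughly 52.6 minutes a year, or about 4.3 minutes in a 30-day month.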

Your SLA is your main tool for holding providers accountable. It sets clear expectations and outlines the consequences—usually service credits—if they don't deliver. Don't just skim it; read the fine print.

Understanding the True Cost of Connectivity

The price tag on connectivity is rarely the full story. To understand the real financial impact, you have to look at the Total Cost of Ownership (TCO), which includes everything from the initial buildout to ongoing operational costs. This usually boils down to a classic CapEx vs. OpEx decision.

Capital Expenditures (CapEx) are your upfront investments. When you buy dark fiber or your own switching hardware, that's a CapEx play. It gives you more control and can be cheaper in the long run, but it requires a hefty initial investment and the in-house team to manage it.

Operational Expenditures (OpEx) are the recurring, pay-as-you-go costs. Think lit services or fully managed network solutions. This model offers predictable monthly bills and offloads the management headaches to your provider, though it might cost more over the life of the service.
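A first-pass TCO comparison often comes down to finding the break-even point. The Python sketch below finds the first month in which an upfront-heavy option (such as dark fiber with your own optics) becomes cheaper in cumulative terms than a recurring-fee option (such as a lit service). All dollar figures are hypothetical placeholders, not market pricing, and the model ignores financing costs, depreciation, and price changes.

```python
from typing import Optional


def cumulative_cost(upfront: float, monthly: float, months: int) -> float:
    """Total spend after a number of months: one-time cost plus recurring cost."""
    return upfront + monthly * months


def breakeven_month(capex_upfront: float, capex_monthly: float,
                    opex_monthly: float, horizon_months: int = 240) -> Optional[int]:
    """First month in which the CapEx option's cumulative cost is <= the OpEx option's, or None."""
    for month in range(1, horizon_months + 1):
        if cumulative_cost(capex_upfront, capex_monthly, month) <= cumulative_cost(0, opex_monthly, month):
            return month
    return None


# Hypothetical figures for illustration only.
DARK_FIBER_UPFRONT = 600_000   # fiber lease/purchase plus optical equipment
DARK_FIBER_MONTHLY = 5_000     # maintenance, power, staff time
LIT_SERVICE_MONTHLY = 20_000   # recurring charge for a managed circuit

month = breakeven_month(DARK_FIBER_UPFRONT, DARK_FIBER_MONTHLY, LIT_SERVICE_MONTHLY)
print(f"Break-even after {month} months" if month else "No break-even within horizon")
```

With these made-up numbers the upfront option pays for itself after 40 months; if the recurring savings were zero, there would be no break-even at all, which is why scale and contract length drive this decision.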

Overlooking Security and Permitting at Your Peril

Two of the most common—and most costly—mistakes are overlooking physical security and underestimating the nightmare of permitting. You can have the most advanced network in the world, but if the fiber vault is unlocked or a single backhoe can take out your only two routes, you're exposed.

Then there's the bureaucracy. Securing rights-of-way, navigating local construction codes, and dealing with environmental regulations can stall a project for months and blow the budget wide open. This is where having the right partner becomes a game-changer.

An experienced infrastructure partner who knows the local landscape and has the right relationships can handle this entire process for you. They turn what could be a project-killing bottleneck into just another box to check on the way to a successful deployment.

From Blueprint to Reality: A Deployment Guide

A brilliant network design is just that—a design. Its true value only comes to life through flawless execution. Taking that architectural plan and turning it into a high-performance physical network is where meticulous engineering meets skilled, hands-on craftsmanship. This is the critical phase where strategic vision becomes a reliable, manageable, and scalable reality.

The journey from a drawing to a fully lit circuit is a multi-step process that starts long before a single cable is pulled. It all begins with comprehensive site surveys and pathway engineering. You have to walk the routes, see the terrain, and identify every potential obstacle to ensure the infrastructure you’ve planned can actually be built safely and efficiently.

This initial diligence is what prevents those costly surprises and frustrating delays down the line. It's the foundational work that underpins the entire project's success.

The Craftsmanship of Fiber Installation

Once the pathways are confirmed, the work shifts to the precise, hands-on task of installation. This is so much more than just laying cable. Fiber splicing and termination are highly specialized skills that require an almost artistic level of precision to create connections that minimize signal loss and maximize performance.

Think about it: a single, poorly executed splice can degrade an entire high-capacity link. This is where the quality of your installation team directly impacts the long-term reliability of the network. Each connection has to be nearly perfect to handle the immense data volumes modern data centers push through it every second.

After the physical connections are made, the network has to be put through its paces. This isn't a simple "on/off" check; it's a deep diagnostic process using specialized tools to certify the network's health and prove it meets industry standards.

The testing phase is non-negotiable. It is the only way to prove that the network built matches the network designed. It provides the baseline performance data that will be essential for troubleshooting and maintenance for years to come.

A few key tests are absolutely essential:

- Optical Time-Domain Reflectometer (OTDR) Testing: This acts like radar for the fiber optic cable. It sends a pulse of light down the line and measures the light reflected and scattered back, using the return time to precisely locate splices, connectors, and any faults or breaks. The result is a detailed map of the cable's physical integrity.

- Power Metering: This test measures the exact amount of light power reaching the end of the circuit. It confirms whether the total signal loss across the link stays within the "loss budget," the maximum allowable loss defined during the design phase.

This verification is the final gate before handing over the keys, guaranteeing the network is truly ready for service.
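Both tests rest on simple arithmetic. The Python sketch below converts an OTDR round-trip time into a distance along the fiber and compares a power-meter reading against a calculated loss budget. The group index and the per-kilometer, per-connector, and per-splice losses are assumed planning values for illustration; a real design would take them from the cable datasheet, the applicable cabling standard, and the project specification.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458
GROUP_INDEX = 1.468  # assumed; use the value from the cable datasheet

# Assumed planning values in dB; substitute figures from the design spec or vendor data.
FIBER_DB_PER_KM = 0.35
CONNECTOR_PAIR_DB = 0.75
SPLICE_DB = 0.3


def otdr_event_distance_m(round_trip_s: float, group_index: float = GROUP_INDEX) -> float:
    """Distance to an event from the time its light takes to return to the OTDR."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / (2 * group_index)


def expected_link_loss_db(length_km: float, connector_pairs: int, splices: int) -> float:
    """Loss budget: fiber attenuation plus connector and splice losses."""
    return length_km * FIBER_DB_PER_KM + connector_pairs * CONNECTOR_PAIR_DB + splices * SPLICE_DB


def passes_loss_budget(tx_dbm: float, rx_dbm: float, budget_db: float) -> bool:
    """Power-meter check: measured loss (transmit minus receive power) must not exceed the budget."""
    return (tx_dbm - rx_dbm) <= budget_db


budget = expected_link_loss_db(length_km=10, connector_pairs=2, splices=4)
print(f"OTDR event at {otdr_event_distance_m(50e-6):.0f} m")
print(f"Loss budget: {budget:.2f} dB")
print("PASS" if passes_loss_budget(tx_dbm=-1.0, rx_dbm=-6.5, budget_db=budget) else "FAIL")
```

In this hypothetical 10 km link, the measured 5.5 dB loss falls inside the 6.2 dB budget; the recorded values then become the baseline for future troubleshooting.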

Sustaining Performance Through Proactive Operations

With the network live, the focus shifts from deployment to long-term operational excellence. A "set it and forget it" approach is a recipe for disaster. Keeping that network at peak performance requires a proactive strategy built on diligent monitoring, scheduled maintenance, and excellent documentation.

This operational phase is where turnkey network solutions truly shine, as a dedicated partner can manage the entire lifecycle from construction to ongoing support. When planning a deployment or upgrade, a detailed data center migration checklist can also be an invaluable resource for navigating the complexities involved.

A robust operational plan always includes these core components:

- Proactive Monitoring: Implementing systems to constantly watch over network health, flagging potential issues like signal degradation before they can cause an outage.

- Preventative Maintenance: Sticking to a schedule for routine tasks, like cleaning connectors and re-testing key fiber links, to ensure the infrastructure stays in top condition.

- As-Built Documentation: This is the network’s definitive architectural record. It's a detailed set of documents and diagrams showing exactly how the network was built, including precise cable routes, splice locations, and initial test results. This documentation is gold for future upgrades, troubleshooting, and rapid disaster recovery.
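Proactive monitoring and as-built documentation work together: acceptance-test results give each link a baseline, and monitoring flags drift from it. The Python sketch below classifies links by how far received optical power has fallen below baseline. The link names, readings, and the 1 dB and 3 dB thresholds are hypothetical; appropriate thresholds depend on the optics and the link's loss budget.

```python
from dataclasses import dataclass


@dataclass
class LinkReading:
    link_id: str
    baseline_rx_dbm: float  # receive power recorded at acceptance testing (as-built records)
    current_rx_dbm: float   # latest receive power reported by monitoring


# Hypothetical alert thresholds, in dB of degradation from baseline.
WARN_DB = 1.0
CRITICAL_DB = 3.0


def classify(reading: LinkReading) -> str:
    """Label a link by how much its receive power has dropped since commissioning."""
    degradation = reading.baseline_rx_dbm - reading.current_rx_dbm
    if degradation >= CRITICAL_DB:
        return "CRITICAL"
    if degradation >= WARN_DB:
        return "WARN"
    return "OK"


READINGS = [
    LinkReading("dc1-dc2-primary", baseline_rx_dbm=-6.5, current_rx_dbm=-6.7),
    LinkReading("dc1-dc2-secondary", baseline_rx_dbm=-7.0, current_rx_dbm=-8.4),
    LinkReading("dc1-cloud-onramp", baseline_rx_dbm=-5.0, current_rx_dbm=-9.1),
]

for r in READINGS:
    print(f"{r.link_id:<20} {classify(r)}")
```

A WARN here might prompt a connector cleaning or re-test during scheduled maintenance, long before the link degrades enough to drop traffic.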

Ultimately, a successful deployment isn't just about the initial build. It’s about the combination of skilled installation, certified testing, and meticulous documentation that ensures your network is reliable on day one and easy to manage for its entire lifecycle.

Your Connectivity Questions, Answered

As you map out your data center strategy, you're bound to run into some confusing terminology. Getting the concepts right isn't just an academic exercise—it's crucial for making smart architectural decisions that will support your business for years to come.

Let's clear up some of the most common questions we hear from operators every day.

What’s the Real Difference Between a Cross-Connect and an Interconnect?

This is easily one of the most frequent points of confusion, but it helps to think of it as the difference between a strategy and a tool.

Interconnection is the big-picture strategy. It’s the entire ecosystem within a data center that allows hundreds of different networks—carriers, cloud providers, enterprises, and partners—to connect and exchange traffic with each other. It’s the digital marketplace.

A cross-connect is the physical cable that makes that interconnection happen. It's the specific, point-to-point fiber or copper line you order to link your equipment to another company's gear inside the same building.

So, interconnection is the valuable ecosystem you want to be a part of; the cross-connect is the tangible link you buy to get in the game.

Why Is a Meet-Me Room So Important?

The Meet-Me Room (MMR) is the literal heart of a data center’s connectivity. It’s a highly secure, carrier-neutral space where all the different networks physically come together. Think of it as the central trading floor for data.

Its importance really boils down to three huge advantages:

- Choice and Competition: A packed MMR is like a massive menu of potential partners: ISPs, cloud platforms, SaaS companies, you name it. This level of competition tends to drive down prices and gives you more flexible terms.

- Raw Performance: By setting up a direct cross-connect in the MMR, you get to bypass the public internet completely. This gives you a private, ultra-low latency, high-bandwidth link to your partners, which can have a massive impact on application performance.

- Agility: Need to add a new provider or switch carriers? In a dense MMR, your next connection is often just a few hundred feet of fiber away. You can get new services turned up in days, not months.

A data center with a vibrant MMR isn't just a place to house servers; it's a powerful strategic asset for building your digital business.

How Does Edge Computing Change Connectivity Needs?

Edge computing doesn't get rid of the need for big, centralized data centers. In fact, it makes the connections between the "core" and the "edge" more critical than ever.

The whole point of the edge is to move some processing and storage closer to users to cut down on lag. But all that data eventually has to flow back to a central data center for heavy-duty analytics, long-term storage, and management.

Edge computing creates a massive new demand for high-capacity, low-latency "mid-mile" networks. These are the digital superhighways that shuttle immense volumes of data between thousands of local edge sites and the central cloud or enterprise data centers.

This means that high-performance solutions like dark fiber and dedicated wavelength services are no longer a luxury; they're essential for building the robust backhaul infrastructure the edge requires. The rise of the edge only amplifies the need for a rock-solid data center connectivity strategy.

When Should I Choose Dark Fiber Instead of a Lit Service?

This is the classic "build vs. buy" debate in the networking world. The right answer really comes down to your need for control, your scale, and the expertise you have in-house.

You should seriously consider dark fiber when your organization needs:

- Absolute Control: You want to pick, manage, and upgrade your own optical gear on your own timeline, without waiting for a provider.

- Massive Scalability: Your bandwidth needs are enormous and growing. With dark fiber, you can scale capacity to hundreds of gigs or even terabits just by upgrading the equipment on each end.

- Predictable Costs at Scale: You'd rather have a one-time capital expense (CapEx) that you can sweat for years, which often becomes far cheaper than leasing lit services over the long haul.

On the other hand, a lit service is probably the better fit when your priorities are:

- Managed Simplicity: You just need a reliable connection with a solid Service Level Agreement (SLA). You want the provider to handle all the network management and troubleshooting.

- Predictable Operational Costs: You prefer a recurring monthly bill (OpEx) that fits cleanly into your budget without a huge upfront investment.

- Speed to Market: You need to get a high-capacity circuit turned up fast, without the months-long lead times that can come with a new fiber build.

For most enterprises, a lit service is the most practical and efficient choice. For hyperscalers, major financial institutions, and network carriers, dark fiber is often the fundamental building block of their entire network strategy.

Navigating these choices and then executing the physical build-out requires deep, hands-on expertise. From engineering diverse fiber pathways to fitting out a data center with resilient structured cabling, Southern Tier Resources is the turnkey infrastructure partner that turns your connectivity blueprint into a reliable reality. Find out how we deliver end-to-end network solutions.