Data center power is the unsung hero of the digital age. It's the critical infrastructure that takes raw electricity from the utility grid and safely delivers clean, continuous power to every server, switch, and storage array. This unseen engine is what makes every click, stream, and transaction possible, forming the very foundation of digital reliability.

The Unseen Engine of the Digital World

Every time you access a cloud service or send an email, you're relying on a complex, invisible network that ensures data center hardware receives stable, uninterrupted power. It’s the circulatory system for the entire digital economy—a carefully engineered path that electricity travels from a local substation all the way to an individual server rack. If that path fails, the digital services we depend on simply go dark.

A great way to picture this is to think of a city's water supply. The utility grid is like the main reservoir, holding a massive amount of raw energy. That energy first flows into the data center's "treatment plant"—its Uninterruptible Power Supply (UPS) and switchgear—where the power is cleaned, stabilized, and conditioned. From there, it's channeled through the facility's main electrical conduits, much like giant water mains, before being distributed to the server racks, which are like the faucets in every home.

Why a Resilient Power Chain Is Non-Negotiable

Even a momentary disruption in this flow can trigger a cascade of failures, leading to millions in lost revenue, data corruption, and damaged customer trust. That's why building a resilient power chain isn't just a best practice; it's non-negotiable for any modern operation.

This is especially true for:

- Hyperscale Cloud Providers: These massive facilities power global services and need a colossal, constant feed of reliable electricity to support millions of users at once.

- Telecom Carriers: Network operations centers and critical cell sites depend on flawless power to keep our communication lines open 24/7.

- Enterprise Data Centers: Businesses in finance, healthcare, and logistics run applications where even a second of downtime is completely unacceptable.

The incredible power demands of new technologies like AI and machine learning have pushed this infrastructure to its limits. Training a single large-scale AI model can consume megawatts of power, making both efficiency and absolute reliability more critical than they have ever been.

A robust power distribution system isn't just about keeping the lights on; it's about guaranteeing the operational continuity that underpins the global economy. It's the physical foundation for a digital world.

This guide will walk you through the essential components, redundancy models, and strategic planning needed to build a power backbone that’s ready for today's demands and tomorrow's growth.

Tracing Power from the Grid to the Rack

A data center’s power system is a story of transformation. It starts with raw, high-voltage electricity from the local utility and ends with the perfectly stable, clean power that sensitive IT gear needs to run flawlessly. This path is anything but simple; it’s a chain of highly specialized equipment, where each component plays a critical role in guaranteeing uptime and protecting millions of dollars in hardware.

To truly appreciate how a data center achieves near-perfect reliability, you have to follow the flow of power from start to finish.

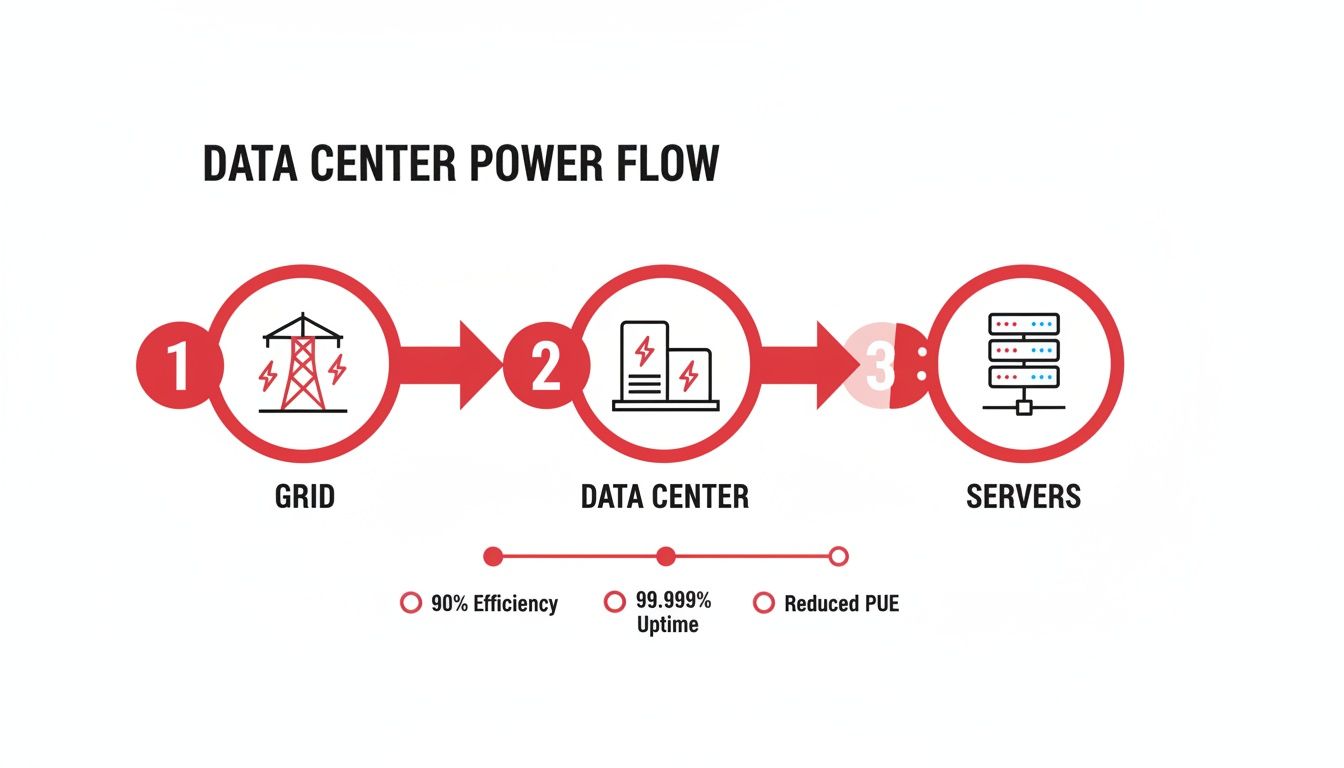

This infographic gives you a bird's-eye view of that journey, from the moment electricity enters the building to when it powers up a server.

While the diagram simplifies a complex process, it highlights the essential stages of stepping down, conditioning, and distributing power to ensure nothing ever goes dark. Now, let’s unpack each step in this mission-critical power chain.

From Utility Feed to Usable Voltage

The journey begins at the utility connection, where massive amounts of power arrive at a very high voltage. The first step is to tame it. This is handled by step-down power transformers, which convert the utility’s high-voltage feed into a lower, more manageable voltage for the facility's internal systems.

From the transformers, that power flows into the main switchgear. Think of this as the master electrical panel for the entire data center. This assembly of heavy-duty switches, fuses, and circuit breakers is the central hub for directing and protecting the main power feed, allowing operators to safely control where electricity goes, isolate circuits for maintenance, and prevent catastrophic failures.

The First Line of Defense Against Outages

So, what happens when the utility grid fails? The first piece of equipment to react is the Automatic Transfer Switch (ATS). The ATS is an incredibly fast and intelligent gatekeeper. Its one and only job is to constantly monitor the incoming utility power.

The instant it senses a blackout or even a serious dip in quality, it automatically flips the data center’s entire electrical load over to a secondary source—almost always the backup generators. This switchover is a matter of milliseconds, so fast that the IT equipment never even knows it happened.

Of course, the ATS needs somewhere to transfer that load. That's where the backup generator comes in. These are powerful diesel or natural gas engines designed to take over the facility's entire power needs during an outage. While the ATS makes the switch, the generator provides the long-term endurance, capable of running for days if needed to ride out extended utility failures.

Conditioning and Instantaneous Backup Power

Before electricity can reach the servers, it needs to be perfected. That’s the job of the Uninterruptible Power Supply (UPS). A UPS is much more than a giant battery; it’s a sophisticated power conditioner that performs two vital functions:

- Power Purification: It constantly cleans up the "dirty" power from the grid, filtering out sags, spikes, and electrical noise to deliver a pure, stable sine-wave output for the IT equipment.

- Instantaneous Backup: Most importantly, it bridges the critical gap—the few seconds between when the utility fails and when the generator gets up to full speed. The UPS batteries provide immediate, seamless power, ensuring zero interruption to the critical load.

Without a UPS, even a momentary flicker from the grid would be enough to crash every server in the data center. It's the ultimate buffer against unpredictability.

The Final Step to the Server Rack

After being cleaned and secured by the UPS, the power is routed to Power Distribution Units (PDUs). The first stop is typically large, floor-mounted PDUs that take the main feed from the UPS and break it down into smaller, more manageable circuits, which are then run to rows of server cabinets.

The very last link in the chain is the rack-mounted PDU. You can think of these as advanced, intelligent power strips. They take the power supplied to the cabinet and distribute it to the individual servers, switches, and storage arrays inside. Modern intelligent PDUs are key to operational visibility, offering per-outlet monitoring so operators can track energy use, manage capacity, and even remotely reboot a single frozen server.

The demand for this kind of robust power infrastructure is skyrocketing. The global data center power market was valued at USD 9.67 billion in 2024 and is projected to hit USD 17.51 billion by 2033. This growth is fueled by the relentless expansion of hyperscale facilities and the intense power demands of AI. Specifically, the market for PDUs and Power Supply Units (PSUs) is set to explode from USD 9.1 billion in 2024 to a staggering USD 73.4 billion by 2035, representing a compound annual growth rate of 21.09%.

Every single component, from the utility transformer to the rack PDU, forms an unbreakable chain designed for 100% uptime. Understanding this flow is fundamental for anyone working in or with modern data centers. To see how this power system fits into the larger picture, you can read our guide on how to build a data center at https://southerntierresources.com/how-to-build-data-center/.

Designing for Uptime with Redundancy and Resilience

In the world of data centers, uptime isn't a happy accident—it's the product of meticulous, intentional design. While individual components like generators and UPS units supply the power, it's the system's architecture that delivers true resilience. The goal is to strategically eliminate single points of failure, ensuring that if one piece of equipment goes down, the IT load keeps running without a hitch.

To understand these architectures, we use a simple shorthand based on the letter "N." Think of N as the absolute minimum capacity needed to power the facility's critical equipment. It's the baseline, with no backup and no room for error.

Starting from that baseline, we can engineer different levels of fault tolerance. Each one strikes a unique balance between cost, complexity, and the level of protection it offers.

The N+1 Model: Adding a Safety Net

The N+1 model is arguably the most common approach you'll find in enterprise data centers. It’s a straightforward concept: take your baseline "N" requirement and add one extra, independent component to the system.

A great analogy is a spare tire. Your car needs four tires (N) to drive, but you carry a fifth one (+1) just in case of a flat. In a data center that needs three UPS units to handle its IT load (where N=3), an N+1 design would have a fourth, identical UPS ready to go. If any of the main three units fail or need maintenance, the fourth one instantly takes over. This gives you solid protection against a single component failure without breaking the bank.

The 2N Model: Complete Redundancy

When even a moment of downtime is unthinkable, the 2N model provides a much higher level of assurance. This topology isn't just about adding a spare part; it's about building two complete, independent, and mirrored power distribution systems. Each system, often called the "A" side and the "B" side, can support the entire critical load on its own.

Think of it as having two identical cars for your daily commute. If one won't start, you just hop in the other and go. In a 2N facility, servers with dual power supplies are plugged into both the "A" power path and the "B" power path. If the entire "A" side fails—from the utility feed all the way down to the PDU—the "B" side is already carrying the load, and nothing skips a beat.

This is the gold standard for financial institutions, major e-commerce sites, and hyperscale cloud providers. While it effectively doubles your spending on electrical gear, it completely eliminates the power chain as a single point of failure.

Comparing Data Center Redundancy Topologies

Choosing the right redundancy model is a critical decision that balances uptime requirements against budget and operational complexity. The following table breaks down the most common topologies to help clarify the trade-offs.

| Topology | Description | Typical Uptime | Best For |

|---|---|---|---|

| N | No Redundancy: Just enough capacity to power the critical load. Any single component failure results in an outage. | 99.749% (Tier I) | Development labs, non-critical systems, or environments where downtime is acceptable. |

| N+1 | Component Redundancy: An extra component (UPS, generator, etc.) is available to take over if one fails. | 99.982% (Tier II) | Most enterprise data centers, small to medium-sized businesses, and applications with moderate uptime needs. |

| 2N | Full System Redundancy: Two completely independent and mirrored power systems, each capable of powering the entire load. | 99.995% (Tier IV) | Mission-critical applications, financial services, healthcare, and hyperscale cloud platforms where downtime is not an option. |

| 2N+1 | System + Component Redundancy: A fully redundant 2N system with an additional backup component on each side. | >99.995% | Extreme-availability environments, government facilities, and organizations with the highest possible risk aversion. |

Ultimately, this comparison highlights that there is no one-size-fits-all solution. The "best" model is the one that aligns with your organization's specific operational needs and risk tolerance.

Advanced Topologies: 2N+1 and Beyond

For the ultimate in resilience, some facilities adopt a 2N+1 design. This approach takes the full mirroring of a 2N system and adds an extra backup component to each side. In essence, it combines the isolation of 2N with the component-level backup of N+1, protecting you from both a full system failure and a subsequent equipment failure on the remaining side.

Making the right choice always comes down to a trade-off. 2N offers near-flawless uptime, but it’s expensive and demands a lot of physical space. An N+1 system is much more cost-effective but leaves you vulnerable if a second component fails while the first is being repaired.

The decision hinges on your business's tolerance for risk and the real-world financial impact of an outage. And remember, this robust power infrastructure is only as good as its physical organization, which is why effective data center cable management solutions are so critical for maintaining airflow and accessibility.

Planning for Growth with Capacity and Load Calculation

Designing a data center's power system is a high-stakes balancing act. Get it wrong, and the consequences are severe. If you overprovision—building way more capacity than you need—you’ll sink millions into capital that just sits there, burning cash on operational inefficiency.

But underprovisioning is the real nightmare. It's a guarantee of future bottlenecks, power-related outages, and eventually, a disruptive and incredibly expensive overhaul. You'll be scrambling to fix the foundation while the business is trying to grow on top of it.

Finding the Sweet Spot with Capacity Planning

This is where effective capacity planning comes in. It’s the discipline of finding that sweet spot between spending too much and not spending enough. This isn't just a one-time calculation; it's a methodical process of figuring out your total power needs, not just for launch day but for years down the road.

This foresight is what separates a truly scalable, future-proof facility from one that will hit a wall in a couple of years. The whole point is to engineer an electrical backbone that can gracefully handle growth. You want to be able to add racks, roll in new servers, and adopt new tech without ever worrying about hitting a power ceiling.

Calculating Power From the Server Up

You can’t size a data center’s power infrastructure from the top down. You have to start at the most fundamental level: the individual server. Everything flows from there.

The approach is almost always bottom-up:

- Start with the Nameplate: Every piece of gear—servers, switches, storage arrays—has a manufacturer's "nameplate" power rating. This number is the absolute maximum it could ever draw, so it’s a conservative, safe place to begin.

- Estimate the Real Load: In the real world, equipment rarely runs at 100% load around the clock. By using actual consumption figures from similar hardware or solid estimates, you get a much more realistic picture of the day-to-day demand.

- Calculate Per-Rack Demand: Add up the power requirements for every device you plan to put in a single rack. This total load per rack is a mission-critical number that dictates everything from rack density to the type of Power Distribution Units (PDUs) you'll need.

- Zoom Out to the Facility Level: Finally, multiply that per-rack load by the total number of racks you plan to install. Don't forget to add in the power for cooling systems, lighting, and other essential building services.

This detailed math gives you the baseline "N" requirement we talked about earlier, which becomes the bedrock for your entire redundancy strategy and power system design.

Accurate load calculation isn't just an engineering exercise; it's a financial necessity. Getting it right prevents overspending on oversized generators and UPS systems while protecting the business from the catastrophic costs of downtime.

Lately, this whole process has gotten much more complicated. The rise of new, incredibly power-hungry technologies means that planning for tomorrow’s workloads is now the most critical part of the equation.

Factoring in Future Growth and AI Workloads

A data center built only for today's needs is already obsolete. A smart capacity plan absolutely must account for future IT hardware refreshes, the trend toward higher rack densities, and the explosive power demands of next-generation computing.

High-performance computing (HPC) and artificial intelligence (AI) workloads have completely torn up the old rulebook. A standard server rack might have drawn 5-10 kW a few years ago. Today, a rack packed with high-density GPUs for AI training can easily pull 50-100 kW—a tenfold increase that puts an unbelievable strain on a facility’s electrical and cooling infrastructure.

This trend is already having a national impact. In 2023, U.S. data centers consumed an estimated 176 TWh of electricity, or 4.4% of the national total. That’s a massive jump from a stable 60 TWh (1.9%) in 2018. After a long period of flat energy use, demand is skyrocketing, driven almost entirely by AI and hyperscale expansion. Some projections show data centers consuming up to 12% of U.S. electricity by 2028. You can read the full research about these energy usage trends to grasp the sheer scale of this challenge.

So, what does a modern, future-ready capacity plan look like? It has to include:

- Scalable Busways: Instead of locking yourself in with traditional under-floor wiring, using overhead busways gives you the flexibility to add or move power drops as rack densities evolve.

- Modular UPS Systems: Why buy a massive UPS on day one? Modular units let you add power capacity incrementally, so your investment keeps pace with actual growth.

- High-Density PDUs: It’s critical to specify rack PDUs that can handle 3-phase power and higher amperages. This is non-negotiable for supporting future AI-ready hardware.

By building for these high-density demands from the start, you design a power system that enables innovation instead of becoming a barrier to it.

Ensuring Power Quality and Intelligent System Monitoring

Getting enough power to your racks is just one part of the equation. The quality of that power is what truly protects the sensitive, high-value IT equipment that runs your business. The raw power coming from the utility grid is rarely clean; it’s often full of sags, swells, and electrical noise that can cause absolute havoc on servers and storage arrays.

These imperfections can lead to everything from corrupted data to outright hardware failure. This is why a well-designed data center power distribution system isn't just a conduit for electricity—it's the final, critical purification stage.

Think of it like the fuel line for a high-performance engine. You wouldn't pump unfiltered, low-grade fuel into a race car, right? The same logic applies here. A voltage sag is like a drop in fuel pressure, causing the engine to sputter. A voltage swell is a sudden surge that could blow a gasket. And erratic electrical flow, known as harmonics, is like sending contaminated fuel through the line, causing cumulative damage over time. Your UPS and power conditioners are the high-tech fuel filters, smoothing everything out to deliver a clean, stable, and predictable supply.

This constant power conditioning is the invisible barrier that separates a reliable facility from one plagued by mysterious and recurring hardware faults. It’s an absolutely essential layer of protection.

The Shift to Proactive Monitoring

Not too long ago, monitoring the power chain was a pretty reactive affair. An alarm would go off, and teams would scramble to fix a component that had already failed. In a modern data center, that approach is a recipe for disaster.

Today, the standard is intelligent, real-time monitoring that gives you deep visibility into every single link in the power chain. We’re talking from the moment power enters the building right down to an individual server's outlet.

This complete operational picture is pieced together by a few key systems working in concert:

- Building Management Systems (BMS): Think of these as the 30,000-foot view. A BMS keeps an eye on the entire facility's health, including the big-iron electrical gear like switchgear, generators, and the central UPS units.

- Data Center Infrastructure Management (DCIM): DCIM software drills down much deeper, focusing specifically on the IT infrastructure. It maps power and cooling resources against the actual IT load, helping operators find stranded capacity and plan for new deployments with real data.

- Intelligent PDUs: Down at the rack level, modern Power Distribution Units are the true heroes. These "smart" PDUs provide a constant stream of real-time, per-outlet data on voltage, current, and power consumption.

This firehose of data completely changes the game. Operations shift from reactive fire-fighting to proactive, strategic management.

With intelligent monitoring, data center operators can spot a degrading component or an overloaded circuit before it fails. This shifts the entire maintenance paradigm from break-fix to predictive, preventing outages and maximizing uptime.

Turning Data into Actionable Insights

Of course, collecting terabytes of data is useless if you don't do anything with it. The real value of intelligent monitoring lies in turning that data into smarter decisions that boost both reliability and efficiency. For example, tracking per-outlet power draw lets you confidently increase rack densities without just guessing and hoping you don't trip a breaker.

This becomes especially critical when managing the colossal power requirements of modern AI clusters. A single, high-density AI server can easily pull as much power as an entire rack of traditional servers from a decade ago, making precise, real-time load management a non-negotiable requirement.

The practical benefits of this approach are clear:

- Proactive Fault Detection: Catch failing components or power quality problems long before they can cause an outage.

- Energy Efficiency Optimization: Hunt down "ghost servers" that are drawing power but doing no useful work and find clear opportunities to improve your PUE (Power Usage Effectiveness).

- Accurate Capacity Planning: Use actual historical trend data to forecast future power needs, which helps avoid costly overprovisioning.

- Improved Uptime: Resolve potential issues before they ever threaten the critical IT load, directly bolstering business continuity.

Ultimately, a modern power distribution system isn’t just about moving electrons from point A to point B. It’s a smart, data-driven ecosystem designed to deliver flawlessly clean and reliable power while giving operators the visibility they need to keep it that way.

Building a Future-Proof Power Infrastructure

Designing a truly reliable data center power system is more art than science. It's a careful blend of heavy-duty electrical engineering, strategic foresight, and a deep, hands-on understanding of day-to-day operations. Every decision, from choosing the right redundancy model like N+1 or 2N to meticulously planning for future server loads, has a direct line to uptime and business continuity.

Think of it as a chain stretching from the utility pole all the way to the server rack—every single link has to be unbreakable.

Success hinges on seeing the big picture. It’s not just about bolting together switchgear, generators, and UPS units. It’s about integrating them with smart monitoring systems and ironclad safety protocols. We all learned this lesson the hard way in the early 2000s. The first internet boom caused U.S. data center electricity consumption to skyrocket by 90% between 2000 and 2005 alone. Facilities that hadn't planned for that kind of explosive growth were left scrambling.

Partnering for Resilient Growth

Today, the stakes are even higher. The relentless demands of AI and high-density computing require a whole new level of expertise to build power backbones that are both scalable and efficient. As more facilities move to advanced battery technologies for their UPS systems, managing new risks is paramount. Implementing safety solutions like fireproof lithium battery containers becomes absolutely critical for preventing issues like thermal runaway.

A truly future-proof power infrastructure is not just a collection of hardware; it is a strategically engineered ecosystem designed for resilience, efficiency, and growth. It requires a partner who understands the complete picture.

For hyperscalers, telecom carriers, and large enterprises, trying to navigate this landscape alone is a tall order. The smartest move is to bring in an experienced partner. Working with proven infrastructure experts and utility contracting companies is the surest way to build a power system that’s ready for tomorrow's demands, ensuring your facility remains a competitive asset for years to come.

Common Questions About Power Distribution

When you're dealing with something as critical as data center power, a lot of questions come up. It's a complex topic, and getting clear answers is key to making the right decisions for your infrastructure. Let's break down a few of the most frequent questions we hear from clients.

What’s the Real Difference Between a UPS and a Generator?

It’s easy to lump these two together, but they serve completely different—and equally vital—roles in keeping the lights on. The best way to think about it is like a relay race team with a sprinter and a marathon runner.

The Uninterruptible Power Supply (UPS) is your sprinter. The moment utility power flickers, it kicks in instantly, providing clean, battery-powered energy. It's not designed to run for long, maybe just a few minutes, but its job is to bridge that critical gap so your IT gear doesn't experience even a millisecond of an outage.

The generator is the marathon runner. It takes a moment to spin up and stabilize, but once it's going, it can carry the entire data center's load for hours or even days. The UPS handles the immediate crisis and then passes the baton to the generator, which takes over for the long haul. One gives you instant protection; the other provides long-term endurance. They're a team.

How Do I Choose the Right PDU for My Server Racks?

Picking the right Power Distribution Unit (PDU) for your racks is about more than just plugging things in; it's about reliability and visibility. The choice really boils down to three things: your power needs, how much monitoring you want, and the physical fit.

First, you have to do the math. Add up the total power draw for everything you plan to put in a single rack. That number will tell you the amperage and voltage you need for your PDU. You'll also need to count up the number and type of outlets required for all your servers, switches, and other gear.

Next, think about how smart you need the PDU to be:

- Basic PDUs: Think of these as heavy-duty power strips. They get the job done but offer no intelligence.

- Metered PDUs: These give you a simple digital readout of the total power being pulled through the unit, which helps you avoid overloading the circuit.

- Monitored PDUs: Now you're getting smarter. These let you see the PDU's total load remotely over the network.

- Switched PDUs: This is the top tier. You get all the monitoring capabilities, plus the ability to remotely turn individual outlets on and off. This is a lifesaver for rebooting a locked-up server without having to send someone to the data center.

For any environment where uptime is truly critical, switched PDUs are the way to go. The detailed, per-outlet data they provide is invaluable for managing capacity and spotting problems before they take you down.

Finally, make sure the PDU actually fits in your cabinet. Vertical, or 0U, PDUs are the standard choice because they mount along the side, leaving all your valuable rack space free for IT equipment.

What Does 2N Redundancy Actually Mean for Business Continuity?

When you hear engineers talk about 2N redundancy, they're describing a design with two completely independent, mirror-image power systems. Everything is duplicated, from the utility feeds and generators all the way down to the PDUs in the rack. Each of these systems—usually called the 'A' side and the 'B' side—is built to carry the entire critical IT load on its own.

From a business continuity perspective, this is the gold standard.

It means that if the entire 'A' power chain catastrophically fails—a UPS blows up, a main breaker trips, a PDU dies—it has absolutely no impact on your operations. The 'B' side is already running and sharing the load, so dual-corded servers don't even notice the failure.

A 2N design effectively eliminates your entire power infrastructure as a single point of failure. Yes, the upfront cost is nearly double that of a simpler system. But for any business where even a few minutes of downtime means significant financial or reputational harm, it's a non-negotiable investment in true resilience. It's the ultimate insurance policy against a power failure taking your business offline.

Building, upgrading, and maintaining these complex systems requires deep expertise. Southern Tier Resources provides end-to-end data center infrastructure fit-outs, from power and connectivity to structured cabling, ensuring your facility is built for reliability and scalability. Learn how our turnkey solutions can future-proof your infrastructure.